In September 2020, a documentary film called The Social Dilemma was launched on Netflix, and became one of the most watched and most notable films of the year.

The Social Dilemma demonstrates how social media platforms are designed not only to consume your time and attention, but also to constantly learn more about you. By gathering information about you and keeping you engaged as a regular user, the platform can ultimately sell ads to be shown specifically to you (and people who share some traits with you).

Whether you use Facebook, Twitter, Instagram, YouTube, or some other social media platform, the principles are essentially the same: all these platforms use a targeted advertising model in order to make money whilst making the platform available to users at no monetary cost. The message of the film is that using these platforms can have non-obvious costs: from increasing anxiety to influencing elections.

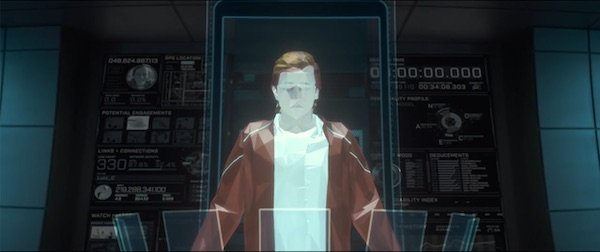

The film uses the docu-drama format: the themes of the film are explained in part by a drama story, which shows the impact of heavy social media usage on a family. It also shows how a social media network targets content and adverts at each individual: this is demonstrated by three anthropomorphised AI algorithms which interact with each other in a control room.

The story is interspersed with interviews with a number of individuals, many of whom have left big tech companies because of the concerns they had with the business model. For me, this was the real highlight of the film: bringing together so many voices who have spoken out about this into one documentary.

The film left me wanting to know more about these individuals, their stories, and what they are doing now. So I did some research, and in this post I will share what I’ve learned.

I’ve arranged the interviewees in the film into three groups: The Center for Humane Tech Crew, The Reformed Big-Tech Insiders, and The Academics, Researchers, and Authors.

The Center For Humane Tech Crew

The Center For Humane Tech features prominently in the film. This non-profit has been raising awareness of the issues in the film for a number of years, but The Social Dilemma is perhaps the best publicity they have had for their message.

We were all looking for the moment when technology would overwhelm human strengths and intelligence. When is it gonna cross the singularity, replace our jobs, be smarter than humans? But there’s this much earlier moment… when technology exceeds and overwhelms human weaknesses.

Tristan Harris

The star of the show is Tristan Harris, co-founder & president of The Center For Humane Technology. A former Google employee, Harris has become a figurehead of the movement raising concern about the distracting and addictive nature of smartphones and social networking platforms.

Harris therefore has a special prominence in this film: as well as being interviewed, he is filmed in the lead-up to a speech and during the speech itself. In it, he argues that technology has advanced to the stage where it overpowers and controls us, not yet all the time, but when we are at our weakest.

Advertisers are the customers. We’re the thing being sold.

Aza Raskin

The two less prominent co-founders of The Center For Humane Technology also feature in the film. Whilst running Humanized, a user interface consultancy, Aza Raskin invented the concept of infinite scroll, now used by so many social networks, apps, and websites to show you an endless stream of content. He introduced infinite scroll to Google at a talk in 2008, and now regrets introducing it to the world, though it seems inevitable that someone would have done it sooner or later.

Perhaps the most dangerous piece of all this is the fact that it’s driven by technology that’s advancing exponentially.

Randima (Randy) Fernando

Randy Fernando also has a background in technology, having worked for some years at the computer graphics hardware company Nvidia. However, he was introduced to meditation as a child, and this also influenced his career: he later ran Mindful Schools, a nonprofit teaching meditation to kids. Finally, these interests were combined when he co-founded the Center For Humane Technology.

There’s no one bad guy

Lynn Fox

Lynn Fox has worked in communications for several large tech firms including Apple, Google, and Twitter. She now manages communications for the Center For Humane Technology – and this seems to be the main reason she features in the film – but she has clearly done a great job raising the profile of the Center For Humane Technology!

The Reformed Big-Tech Insiders

Along with Harris, a number of other ex-employees of big tech firms also feature in the film.

When we were making the like button, our entire motivation was, “Can we spread positivity and love in the world?” The idea that, fast-forward to today, and teens would be getting depressed when they don’t have enough likes, or it could be leading to political polarization was nowhere on our radar.

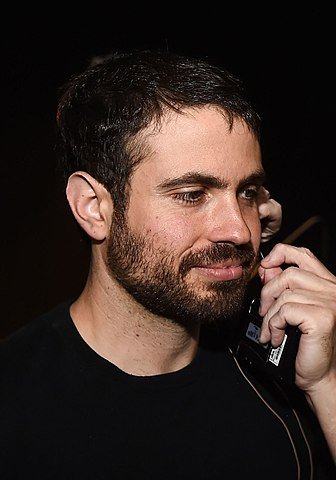

Justin Rosenstein

Justin Rosenstein (now co-founder of Asana) worked at Facebook when he and teammates invented the Like button. He has since spoken out about the addictive nature of social media.

What I want people to know is that everything they’re doing online is being watched, is being tracked, is being measured.

Jeff Seibert

Jeff Seibert was Head of Product at Twitter, after Crashlytics, a company he co-founded, was acquired by Twitter (it has since been sold on to Google). In the film, he discusses how tagging other users is encouraged to maximize interaction time, since users are far more eager to respond to human actions than to purely algorithmic notifications.

And over time, by running these constant experiments, you… you develop the most optimal way to get users to do what you want them to do. It’s… It’s manipulation.

Sandy Parakilas

Sandy Parakilas worked in data protection at Facebook, and has since become a whistleblower, recently explaining to a UK parliamentary committee that users’ data was not safeguarded to the same standards as Facebook’s internal network security.

I don’t think these guys set out to be evil. It’s just the business model that has a problem.

Joe Toscano

Joe Toscano worked as an Experience Design Consultant for Google, and left his role due to ethical concerns about the industry. His 2018 book, Automating Humanity, covers much of the same territory as this film.

Rewind a few years ago, I was the… I was the president of Pinterest. I was coming home, and I couldn’t get off my phone once I got home, despite having two young kids who needed my love and attention.

Tim Kendall

Tim Kendall worked for Facebook as the Director of Monetization in the early years. As such, he helped develop the advertising model which Facebook now uses to generate revenue. Later, he was president of Pinterest for a number of years, before he quit to launch a start-up fighting tech addiction. The outcome of this is the Moment app which helps users understand and control screen time.

The algorithm has a mind of its own, so even though a person writes it, it’s written in a way that you kind of build the machine, and then the machine changes itself.

Bailey Richardson

Bailey Richardson is known for quitting Instagram – not so unusual, except she was an early employee at Instagram. She was disappointed with the introduction of algorithms which aim to maximise usage time.

The flat-Earth conspiracy theory was recommended hundreds of millions of times by the algorithm

Guillaume Chaslot

Guillaume Chaslot worked as a Google engineer on YouTube recommendations. He developed concerns about how, through optimising for watch time, algorithms end up inadvertently recommending mindless videos and, worse, fake news. He claims that, before he left, he proposed an improved algorithm optimising for quality, which was swiftly dismissed (though Google disputes his claims).

The bigger it gets, the harder it is for anyone to change.

Alex Roetter

Alex Roetter was Senior VP of Engineering at Twitter. He used to feel that Twitter was a force for good in the world, but he’s no longer convinced of that.

The Academics, Researchers, and Authors

It’s the gradual, slight, imperceptible change in your own behavior and perception that is the product. … That’s the only thing there is for them to make money from. Changing what you do, how you think, who you are. It’s a gradual change.

Jaron Lanier

An eccentric and fascinating character, Jaron Lanier was a pioneer of Virtual Reality in the 1980s, and he is also an accomplished musician. The reasons for his being featured in this documentary are those expressed in more detail in his 2018 book, Ten Arguments for Deleting Your Social Media Accounts Right Now.

They have more information about us than has ever been imagined in human history. It is unprecedented.

Shoshana Zuboff

Shoshana Zuboff is a Harvard professor and author, known for coining the term “surveillance capitalism”. Her 2019 book “The Age of Surveillance Capitalism” describes this concept: the business model which has emerged for online services.

In Myanmar, when people think of the Internet, what they are thinking about is Facebook

Cynthia M. Wong

Cynthia M. Wong worked as senior internet researcher at Human Rights Watch. In the film, she discusses how the ubiquity of Facebook in Myanmar allowed misinformation to spread, contributing to the persecution of the Rohingya Muslim minority in the country. She is now trying to change things from the inside, working on Human Rights at Twitter.

So, here’s the thing. Social media is a drug.

Anna Lembke

Dr. Anna Lembke is Medical Director of Addiction Medicine at Stanford University School of Medicine. She has studied smartphone and technology addiction as well as better recognised addictions such as drug and alcohol addiction. Her new book, Dopamine Nation: Finding Balance in the Age of Indulgence, will be published later in 2021.

Make sure that you get lots of different kinds of information in your own life. I follow people on Twitter that I disagree with because I want to be exposed to different points of view.

Cathy O’Neil

Cathy O’Neil is a mathematician, data scientist, and author. Her book “Weapons of Math Destruction” is about the social impact of algorithms, and how they can reinforce existing inequalities and discrimination.

We all simply are operating on a different set of facts. When that happens at scale, you’re no longer able to reckon with or even consume information that contradicts with that world view that you’ve created.

Rashida Richardson

Rashida Richardson is a lawyer and researcher interested in the social and legal implications of “Big Data” and data-driven technologies. She testified in a Senate Hearing on Technology Companies and Algorithms alongside Tristan Harris.

Before you share, fact-check, consider the source, do that extra Google. If it seems like it’s something designed to really push your emotional buttons, like, it probably is.

Renée DiResta

Renée DiResta is the technical research manager at Stanford Internet Observatory. She investigates the spread of malign narratives across social networks.

There has been a gigantic increase in depression and anxiety for American teenagers which began right around… between 2011 and 2013

Jonathan Haidt

Jonathan Haidt is a social psychologist known for creating Moral Foundations Theory, which explains how people can see the world in very different ways depending on the moral foundations which they prioritise. His recent interview with Freddie Sayers, in which they discuss how right wing and left wing views are influenced by the moral foundations and how this spectrum may have changed in recent years, is well worth watching. In The Social Dilemma, Haidt shares his concerns about rising anxiety and risk-aversion in the generation growing up with smartphones and social media.

The Russians didn’t hack Facebook. What they did was they used the tools that Facebook created for legitimate advertisers and legitimate users, and they applied it to a nefarious purpose.

Roger McNamee

Roger McNamee is a businessman & investor who made an early investment in Facebook. However, in recent years he has been critical of Facebook’s impact on society and democracy.

The right voices?

It is notable that the voices in this documentary are very much centred in Silicon Valley. This is perhaps unsurprising, when many of these individuals worked at big tech firms such as Facebook, Google, and Twitter.

Having Silicon Valley insiders raise awareness of the problems created by social media platforms built in Silicon Valley is poetic and effective, lending credibility to the message of this documentary.

However, when it comes to solving these problems, isn’t it healthier to look outside of Silicon Valley networks? If this documentary represents a kind of transformation of consciousness in Silicon Valley, that is of course very welcome. But for the rest of the world – if the problem is as serious as stated in The Social Dilemma – we should not wait for Silicon Valley to provide the change required.

We can connect with others in our local networks and build alternatives to centralised Silicon Valley-based services.

Of course, not everyone has the time, skillset, or experience to contribute to building these alternatives – but in being aware of the problems of social media, we can all take a look at our own behaviour and start improving our digital habits, and start using alternative, more humane social media services as they emerge.

Justin Emery,

I happened onto your site, tho I am about as far from being a techie as possible. I am a dyslexia specialist and work with children who struggle mightily to read. Over the 40 years of working with children, I’ve seen substantial changes in teenagers’ ability to construct moral arguments or defend their own thinking. This is alarming. You are a wise fellow and, obviously, have trained your mind to probe multiple aspects of causation for social phenoms we experience today. I enjoy your well reasoned thinking and applaud your responsive sharing of your own reading. Please continue this insightful format, sharing broad perspectives and provoking others to do the same. I will revisit your site and will share it with others.

Dear Carol, thank you for sharing your experience and words of encouragement. As you may have noticed I have not posted for some time but hope to get back to it soon when conditions in my life allow me to make space for it.